Custos AI: The Evolving Framework – A Comprehensive Podcast Overview

From Real-Time Monitoring to Predictive Governance: The Tools of an AI-Augmented Ethical Framework

Dear readers,

It is with great enthusiasm that I return today to discuss Custos AI, a concept I began developing and exploring with you on these pages some time ago. As you may recall, since the initial article introducing the "Ethical Hawk-Eye," my ambition has always been to address the eternal question: Quis custodiet ipsos custodes? – Who watches the human decision-makers, those vested with authority, especially when they wield powerful AI tools?

Over a series of seven previous articles, I progressively built and detailed the various pillars of this framework, exploring ideas such as the "Ethical Hawk-Eye" itself, its operationalization, its dialogue with visions for safe AGI, the crucial Shepherd Algorithms, the Directive Normative Ethical Architrave (AEND), and even the integration of preventive chaotic intelligence.

After this journey of exploration and definition, I felt the need to consolidate and systematise the current, evolved vision of the Custos AI framework into a comprehensive presentation. It's essential to note that, as a work in progress, Custos AI has undergone refinements based on further reflection and valuable interactions. Some initial concepts have been modified or even set aside, while new implementations and details have emerged.

To make this updated and more complete architecture as accessible as possible, I am delighted to announce the release of a podcast episode. It has been generated with the incredible AI technology of Google's NotebookLM and offers a concise overview of the key points of Custos AI, enough for an essential understanding of the entire system.

It's worth noting that individual components of Custos AI, such as Shepherd Algorithms for real-time ethical monitoring or Preventive Chaotic Intelligence for risk assessment, can be implemented as standalone tools, independent of the complete framework.

CUSTOS AI - Ethical Support Ecosystem for Human Decisions in the Age of AI

Navigating ethical challenges with responsibility and vision, empowering human judgment.

FUNDAMENTAL PRINCIPLE

Custos AI is an institutional framework designed to ethically support human decision-making processes, especially when these involve complex issues or leverage advanced analytical tools (including AI). It is not a super-AI controlling other AIs, but a mechanism that helps institutions ensure that decisions made by human beings are aligned with fundamental values, responsible, and transparent. AI, if used, is a tool whose impact on human decisions can be subject to analysis.

1. CONTEXT: AI – OPPORTUNITIES AND ETHICAL QUESTIONS FOR HUMAN DECISIONS

AI as a Powerful Tool: Artificial Intelligence (AI) offers significant potential, but its application in supporting critical human decisions (economy, healthcare, justice) raises profound ethical concerns.

Ethical Challenges for Human Decision-Makers:

Alignment with Values: How can we ensure that human decisions, even when informed by AI, respect equity, non-discrimination, and privacy?

Human Accountability: If a human decision, influenced by flawed or opaque AI analysis, causes harm, who is responsible? How can decision-makers understand and justify the basis of their choices if they rely on "black boxes"?

Guiding Question: "Quis custodiet ipsos custodes?" – Who ensures the ethical use of tools (including AI) by human decision-makers?

Custos AI Proposal: A multi-level ecosystem for the support and ethical governance of human decisions.

2. CUSTOS AI (MACRO) - THE "ETHICAL HAWK-EYE" FOR THE INSTITUTIONAL DECISION-MAKING PROCESS

"ETHICAL CHALLENGE" Concerning Decisions and Tools Used:

Hawk-Eye Analogy: Just as a challenge in tennis verifies an umpire's call, an "Ethical Challenge" can be requested by Qualified Public Bodies (Parliaments, Courts, Ministries, Guarantors, Ombudsmen) when well-founded and substantiated doubts arise about the ethics of a human decision in preparation or already made, or about the ethical/normative correctness of a specific AI system used (or proposed for use) in supporting such a decision.

Activators and Logic: Only Qualified Public Bodies can activate a Challenge, which ensures seriousness and public relevance, and they must follow a formal and rigorous procedure.

CONDITIONS FOR THE "ETHICAL CHALLENGE":

When: Well-founded doubts about potential violation of norms (e.g., AI Act) or fundamental ethical principles (contained in the AEND - Directive Normative Ethical Architrave) by a human decision or an AI system that influences/will influence it.

How: Formal request to the OAEC - Office for Algorithmic Ethical Compliance, detailing:

The human decision in question and/or the specific AI system (if the doubt directly concerns it).

A description of the alleged ethical problem/violation and impacts.

Commitment to provide access to relevant data (for the AI, if it is subject to analysis).

AEND principles against which verification is requested.
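To make the four required items concrete, the request can be modelled as a simple record with a completeness check. This is a minimal sketch, not part of any published Custos AI specification: the class name `EthicalChallengeRequest` and its field names are hypothetical, and a real OAEC form would carry far more detail.

```python
from dataclasses import dataclass, field


@dataclass
class EthicalChallengeRequest:
    """Hypothetical record of a formal 'Ethical Challenge' sent to the OAEC."""
    requesting_body: str                 # e.g. "Parliament", "Ombudsman"
    human_decision: str = ""             # decision under review (may be empty)
    ai_system: str = ""                  # AI system under review (may be empty)
    alleged_problem: str = ""            # alleged violation and its impacts
    data_access_committed: bool = False  # required only if an AI system is analysed
    aend_principles: list[str] = field(default_factory=list)

    def missing_items(self) -> list[str]:
        """Return the required items that are still absent from the request."""
        missing = []
        if not (self.human_decision or self.ai_system):
            missing.append("human decision and/or AI system")
        if not self.alleged_problem:
            missing.append("description of alleged problem and impacts")
        if self.ai_system and not self.data_access_committed:
            missing.append("commitment to provide data access")
        if not self.aend_principles:
            missing.append("AEND principles to verify against")
        return missing
```

For example, a request that names an AI system but omits the data-access commitment and the AEND principles would come back with exactly those two items listed as missing.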

3. "ETHICAL CHALLENGE" PROCESS (MACRO) - PHASE 1: FORMAL ACTIVATION

Step 1: Reception by the OAEC (Office for Algorithmic Ethical Compliance):

An independent, multidisciplinary body, guarantor of impartiality. Receives the "Ethical Challenge" request.

Step 2: Formal Validation by the OAEC:

Verifies origin, completeness, substantiation, and relevance to Custos AI's mandate.

If validated, the "Ethical Challenge" is accepted, and the investigation begins.
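The four checks in Step 2 can be sketched as an ordered validation function that stops at the first failure. The list of qualified bodies is taken from the examples given earlier in the text; `Challenge`, `evidence_items` (used here as a crude stand-in for "substantiation") and the `min_evidence` threshold are illustrative assumptions.

```python
from dataclasses import dataclass

# Assumed set of Qualified Public Bodies, based on the examples in the text.
QUALIFIED_BODIES = {"Parliament", "Court", "Ministry", "Guarantor", "Ombudsman"}


@dataclass
class Challenge:
    origin: str                     # who is filing the Challenge
    is_complete: bool               # all required items present
    evidence_items: int             # hypothetical proxy for substantiation
    concerns_ai_or_decision: bool   # within Custos AI's mandate


def validate(ch: Challenge, min_evidence: int = 1) -> tuple[bool, str]:
    """Apply origin, completeness, substantiation and mandate checks in order."""
    if ch.origin not in QUALIFIED_BODIES:
        return False, "rejected: origin is not a Qualified Public Body"
    if not ch.is_complete:
        return False, "rejected: request is incomplete"
    if ch.evidence_items < min_evidence:
        return False, "rejected: doubt is not substantiated"
    if not ch.concerns_ai_or_decision:
        return False, "rejected: outside Custos AI's mandate"
    return True, "accepted: investigation begins"
```

Returning a reason alongside the boolean keeps every rejection explainable to the requesting body.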

4. "ETHICAL CHALLENGE" PROCESS (MACRO) - PHASE 2: THE OAEC'S IN-DEPTH INVESTIGATION

Step 3: The OAEC Guides the Multidisciplinary Analysis (analysts, jurists, ethicists).

Key Tools and Frameworks for Analysis:

AEND (Directive Normative Ethical Architrave): The reference "Ethical Constitution" (laws, technical standards such as IEEE 7003 and ISO/IEC 23894, and the FATE principles: Fairness, Accountability, Transparency, Ethics). This is the yardstick against which human decision-making options are evaluated and, where relevant, the operation of the supporting AI system.

SHEPHERD ALGORITHMS (if the supporting AI system is equipped with them):

"Ethical guardians in the code," legally mandated software components integrated into critical AI systems.

They monitor the "host" AI against the AEND, attempt corrections, and if an "Ethical Decision Crash" (severe violation) occurs, they limit the AI and generate an EDCR - Ethical Decision Crash Recording (an internal ethical "black box" for the AI).

Role in OAEC's Investigation: EDCRs become objective primary evidence of the internal behavior of the AI system that supported (or is intended to support) the human decision, if this is the specific subject of doubt. They allow for "deciphering the AI's recorded ethical history," helping the OAEC understand if the AI operated correctly according to the AEND.
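The monitor, correct, limit and record cycle described above can be illustrated with a small wrapper around a host model. This is a hypothetical sketch: the three-level severity scale, the `Shepherd` class and the `host`/`correct` callables are assumptions made for illustration, and a legally mandated component would also need to re-check corrected outputs and protect its own integrity.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# A rule inspects a proposed output and returns a severity:
# 0 = compliant, 1 = correctable deviation, 2 = severe ("Ethical Decision Crash").
Rule = Callable[[dict], int]


@dataclass
class Shepherd:
    host: Callable[[dict], dict]     # the monitored "host" AI (hypothetical interface)
    rules: dict[str, Rule]           # AEND principle name -> check
    correct: Callable[[dict], dict]  # hypothetical correction routine
    limited: bool = False            # once True, the host AI is no longer consulted
    edcrs: list = field(default_factory=list)  # Ethical Decision Crash Recordings

    def run(self, request: dict) -> Optional[dict]:
        if self.limited:
            return None  # host AI was limited after an earlier crash
        output = self.host(request)
        severities = {name: rule(output) for name, rule in self.rules.items()}
        severe = {name: s for name, s in severities.items() if s >= 2}
        if severe:
            # Severe violation: limit the host AI and write an EDCR.
            self.limited = True
            self.edcrs.append({"request": request, "output": output, "violations": severe})
            return None
        if any(s == 1 for s in severities.values()):
            # Correctable deviation: attempt a correction before releasing the output.
            output = self.correct(output)
        return output
```

Keeping `limited` set after a crash means the host AI stays restricted until someone deliberately resets it, while the stored EDCRs remain available as evidence.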

PREVENTIVE CHAOTIC INTELLIGENCE (Extended Chaotic Risk Analysis, if complexity warrants):

For human decisions (or AI systems supporting them) with potential complex and novel systemic impacts.

The specific situation is taken as a "seed," and N micro-variations ("fractal-like branches") are generated.

Flash simulations examine how these variations would impact the outcome of human decision-making or the AI's behaviour, pushing towards AEND limits.

Identifies:

"Negative Attractors": Tendencies towards ethically problematic outcomes (systemic discrimination, disinformation, rights violations).

"Infinities of Chaos" (of different "perceived cardinality"): Denser or more probable classes of risk.

Purpose ("Ethical Forward Defence"): Predictive analysis to anticipate and prevent an entire class of potential errors or negative consequences of human decision-making options, "stealing time and space" from negative developments. Provides insights for more resilient policies.

TARIFFS EXAMPLE: The OAEC uses Preventive Chaotic Intelligence to simulate how different human response options to the tariffs (e.g., various countermeasures or negotiation strategies, perhaps suggested by economic models) could lead to "negative attractors" (e.g., unexpected crises in vulnerable sectors, escalation of retaliations with disproportionate social impacts).

PREVENTIVE CHAOTIC INTELLIGENCE (ICP)

(Extended Chaotic Risk Analysis, activated when complexity warrants)

When we are confronted with human decisions or with supporting AI systems, whose potential impact is profoundly systemic, novel, and intrinsically complex, linear risk assessment methods often reveal their limitations. This is where Preventive Chaotic Intelligence (ICP) comes in, as a crucial analytical tool within the arsenal of the OAEC (Office for Algorithmic Ethical Compliance). This methodology does not seek to predict a single future, but to map the "fertile chaos of possibilities" – that vast landscape of potential outcomes that already exist latently within the fabric of a complex situation, yet elude a purely deterministic view.

ICP operates by starting from a "seed scenario": the specific situation or human decision under examination, or the AI system proposed for analysis. From this seed, through rigorous computational and conceptual exploration, a large number (N) of micro-variations are generated. Imagine them as the innumerable "fractal-like ramifications" of an event, where every small alteration in initial conditions, implicit assumptions, or possible interactions between agents can open up a new trajectory. The goal is to compel thinking (both human and, if used in support, that of analytical AI) to move beyond the "supermarket aisles" of conventional reasoning.
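One way to picture the "fractal-like ramifications" is recursive branching: each scenario spawns `width` slightly perturbed children, down to a fixed `depth`, so N = width**depth. The sketch below assumes numeric scenario parameters and uniform multiplicative noise; both the noise model and the parameter names are hypothetical choices, not a prescribed ICP method.

```python
import random


def branch(seed: dict[str, float], depth: int, width: int,
           spread: float, rng: random.Random) -> list[dict[str, float]]:
    """Recursively perturb a seed scenario into width**depth leaf scenarios.

    At each level every parameter is scaled by a random factor in
    [1 - spread, 1 + spread], so small changes compound along each branch.
    """
    if depth == 0:
        return [seed]
    leaves = []
    for _ in range(width):
        child = {k: v * rng.uniform(1 - spread, 1 + spread) for k, v in seed.items()}
        leaves.extend(branch(child, depth - 1, width, spread, rng))
    return leaves
```

Passing an explicit `random.Random` instance with a fixed seed makes a given analysis reproducible, which matters if its results are later cited as evidence.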

These myriad variations are not mere flights of fancy; they become the input for what we can call "flash simulations" or deep sensitivity analyses. ICP examines how each of these "ripples" in the fabric of the seed scenario could drastically influence the outcome of the human decision or the behaviour of the AI system, pushing it towards the limits (or beyond) of the Directive Normative Ethical Architrave (AEND) and current laws.
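A "flash simulation" pass can be sketched as running a cheap outcome model over every variation and flagging outcomes that exceed a limit. Here `model` and `limits` are placeholders assumed for illustration: in practice they would be a domain simulator and thresholds derived from the AEND and current law.

```python
from typing import Callable

Scenario = dict[str, float]


def flash_simulate(scenarios: list[Scenario],
                   model: Callable[[Scenario], dict[str, float]],
                   limits: dict[str, float]) -> list[dict]:
    """Run an outcome model on every scenario and record which limits are breached."""
    results = []
    for s in scenarios:
        outcome = model(s)
        # A breach is any outcome metric strictly above its assumed AEND-derived cap.
        breaches = [name for name, cap in limits.items() if outcome.get(name, 0.0) > cap]
        results.append({"scenario": s, "outcome": outcome, "breaches": breaches})
    return results
```

With a toy model such as `sme_job_loss = tariff_rate * demand_shock` and a cap of 0.5, a scenario with rate 0.3 and shock 2.0 would be flagged while one with rate 0.2 and shock 1.0 would not.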

Through this immersion in complexity, Preventive Chaotic Intelligence aims to identify and make visible:

"Negative Attractors": These are not isolated, single errors, but genuine dynamic tendencies or "basins of attraction" within the possibility space. These represent configurations of events or system behaviours (human and/or AI) that converge with high probability towards ethically problematic outcomes such as hard-to-detect systemic discrimination, viral amplification of disinformation, widespread and subtle violations of fundamental rights, or even existential risks whose genesis was entirely unforeseeable with linear approaches.

"Infinities of Chaos" (of different "perceived cardinality"): Metaphorically inspired by the Cantorian concept of infinities of different sizes, ICP attempts to distinguish between the various classes of risk that emerge. Some "negative attractors" or families of problematic scenarios may be "denser," meaning they represent a set of negative possibilities that are more easily accessible, have a broader spillover potential, or possess a qualitatively superior "magnitude" of harm compared to others. Understanding this "geometry of risk" is crucial.
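Both ideas can be approximated, very crudely, in code. The sketch below bins scenarios along one parameter and treats a bin as a "negative attractor" when most of its scenarios breach an AEND limit, then ranks breach types by a density score (share of scenarios affected multiplied by a severity weight). Binning, the default 0.8 threshold and the density formula are assumed proxies, not literal Cantorian cardinalities.

```python
from collections import Counter, defaultdict


def find_attractors(results: list[dict], key: str, bin_width: float,
                    threshold: float = 0.8) -> dict[int, float]:
    """Bin scenarios along one parameter and return bins whose share of
    breaches is at least `threshold`. Bin i covers [i*w, (i+1)*w)."""
    bins = defaultdict(list)
    for r in results:
        bins[int(r["scenario"][key] // bin_width)].append(bool(r["breaches"]))
    shares = {b: sum(flags) / len(flags) for b, flags in sorted(bins.items())}
    return {b: s for b, s in shares.items() if s >= threshold}


def rank_risk_classes(results: list[dict],
                      severity: dict[str, float]) -> list[tuple[str, float]]:
    """Rank breach types by 'density' = share of scenarios x severity weight."""
    counts = Counter(b for r in results for b in r["breaches"])
    scored = [(name, counts[name] / len(results) * severity.get(name, 1.0)) for name in counts]
    return sorted(scored, key=lambda item: item[1], reverse=True)
```

A real ICP would look for basins across many interacting parameters at once; a single-axis histogram is used here only because it makes the idea visible in a few lines.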

The intrinsic purpose of ICP is to implement a genuine "Ethical Forward Defence." It is a profoundly predictive analysis in the sense that it aims to anticipate and, ideally, prevent not only single errors but entire classes of potential ethical failures or negative consequences linked to human decision-making options and the AI systems that support them. ICP seeks to "steal time and space" from the development of negative trajectories, providing crucial and often counterintuitive insights that can lead to governance policies and AI system designs that are intrinsically more resilient, adaptive, and wise in the face of the inescapable complexity of the real world.

Crucially, ICP is not a static analytical tool; it is a self-learning system. Each Extended Chaotic Risk Analysis, every set of "flash simulations," and all identified "Negative Attractors" and "Infinities of Chaos" are not merely outputs. They become vital inputs that feed back into ICP's core models. This continuous feedback loop allows ICP to refine its ability to recognise emerging patterns of risk, to become more sensitive to the subtle "seeds" of potential negative outcomes, and to enhance the accuracy of its "topographies of possibility." In essence, ICP learns from the simulated futures it explores, making its "Ethical Forward Defence" increasingly robust and prescient over time. This self-improvement mechanism ensures that the insights provided to the OAEC, and subsequently used to inform the AEND, AERNs (National Ethical Reference Archives), and the CEA (Central Ethical Archive), are always at the cutting edge of risk anticipation. The system doesn't just map chaos; it learns from it, evolving its capacity to guide us through ever more complex scenarios.
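The self-learning loop could take many forms; one simple, assumed form is to shift the exploration budget toward parameters implicated in newly found attractors. The sketch below uses a multiplicative-weights-style update; `update_sensitivity`, `allocate_budget` and the learning rate `lr` are hypothetical.

```python
def update_sensitivity(weights: dict[str, float], attractor_params: list[str],
                       lr: float = 0.5) -> dict[str, float]:
    """Boost the exploration weight of parameters implicated in new attractors,
    then renormalise so that the weights sum to 1."""
    updated = {k: w * (1 + lr) if k in attractor_params else w for k, w in weights.items()}
    total = sum(updated.values())
    return {k: w / total for k, w in updated.items()}


def allocate_budget(weights: dict[str, float], n: int) -> dict[str, int]:
    """Split a budget of n micro-variations across parameters by weight."""
    budget = {k: int(n * w) for k, w in weights.items()}
    # Give any remainder lost to rounding down to the highest-weight parameter.
    top = max(weights, key=weights.get)
    budget[top] += n - sum(budget.values())
    return budget
```

Renormalising keeps the total exploration effort fixed, so learning changes where ICP looks rather than how much it simulates.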

EXAMPLE ON TARIFFS: The OAEC, by employing ICP, could go beyond standard econometric analysis. It would simulate how various human response options to tariffs (e.g., a wide range of possible countermeasures, subtle negotiation strategies, or even the impact of concomitant exogenous shocks – such as a pandemic or an energy crisis – on decision-making models, perhaps suggested by sophisticated AI economic models) could, by interacting with each other and with global variables, converge towards "negative attractors." These could include entirely unexpected crises in seemingly unrelated sectors that are highly vulnerable to second or third-order effects, an escalation of international retaliations with disproportionate and hard-to-predict social impacts on the most vulnerable groups, or the collapse of confidence in specific markets. ICP analysis, therefore, does not limit itself to predicting an effect, but maps the "topography of possibilities" in which certain catastrophic effects become not only possible but, given certain dynamics, almost inevitable if preventive action is not taken on the "seeds" that generate them. Furthermore, the very act of simulating these tariff scenarios would refine ICP's understanding of economic shock propagation, making future analyses in similar domains even more acute.

5. "ETHICAL CHALLENGE" PROCESS (MACRO) - PHASE 3: FINAL REPORTS AND SUPPORT FOR HUMAN DECISION

Step 4: The OAEC Drafts Concluding Reports:

REPORT 1: ETHICAL COMPLIANCE ANALYSIS (based on objective reality):

Focused on the specific human decision under review and/or the supporting AI system subject to the Challenge.

Assesses compliance with AEND (including data from any EDCRs if a specific AI is under scrutiny).

Identifies causes of non-compliance.

Specific Operational Recommendations: To correct ethical problems in the human decision or supporting AI system and mitigate damages.

TARIFFS EXAMPLE (if the doubt concerns an AI proposing strategies): "Analysis of 'StrategyBot' AI's EDCRs and tests vs. IEEE 7003 (part of AEND) show a bias in suggesting countermeasures that unfairly penalize SMEs in sector Z. It is recommended to recalibrate 'StrategyBot's' decision weights or not to consider its outputs for this specific sector without further critical human evaluation."
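A bias finding like the one in the example typically rests on comparing outcome rates between groups. The sketch below computes a disparate-impact ratio and flags values under 0.8, a threshold borrowed from the US "four-fifths rule" in employment-selection guidance; using it here is an assumption for illustration, not something IEEE 7003 or the AEND is stated to require. Group labels and data are hypothetical.

```python
FOUR_FIFTHS = 0.8  # assumed threshold, borrowed from the US "four-fifths rule"


def favourable_rate(outcomes: list[bool]) -> float:
    """Share of cases with a favourable outcome (True = not penalised)."""
    return sum(outcomes) / len(outcomes)


def disparate_impact(protected: list[bool], reference: list[bool]) -> float:
    """Ratio of favourable-outcome rates: protected group / reference group."""
    return favourable_rate(protected) / favourable_rate(reference)


def bias_finding(group: str, ratio: float) -> str:
    """Turn the ratio into a short, report-style finding."""
    if ratio < FOUR_FIFTHS:
        return f"bias against {group}: ratio {ratio:.2f}; recalibrate or require critical human review"
    return f"no disparity flagged for {group}: ratio {ratio:.2f}"
```

If StrategyBot's suggested countermeasures spared 3 of 10 SMEs but 8 of 10 large firms, the ratio would be 0.375, well below the assumed threshold.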

REPORT 2: PREDICTIVE ANALYSIS (from Preventive Chaotic Intelligence, if activated):

Presents the "chaos map" generated for the human decision-making options under review (e.g., for different tariff response strategies).

Describes extreme but plausible scenarios and systemic vulnerabilities.

Preventive Strategic Recommendations: To "sterilise" vulnerabilities, make policies more ethically resilient, and avoid future "negative attractors."

TARIFFS EXAMPLE: "The chaotic analysis of tariff response options revealed a 'negative attractor': the 'Aggressive Global Countermeasures' option, in scenarios of concurrent economic crisis, could lead to an export collapse for the poorest agricultural regions (violating AEND's principle of territorial equity). It is recommended to discard this option or to integrate preventive compensation mechanisms for such regions, as in simulated scenario B."
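The per-option "chaos map" can be summarised as the share of simulated scenarios in which each response option ends in a negative attractor. This sketch assumes the simulation results are already available as one boolean per scenario; the option names echo the example, while the `max_risk` tolerance of 10% is an invented illustrative value.

```python
def chaos_map(option_results: dict[str, list[bool]],
              max_risk: float = 0.1) -> dict[str, tuple[float, str]]:
    """For each response option, compute the share of simulated scenarios that
    end in a negative attractor and attach a coarse recommendation."""
    report = {}
    for option, hits in option_results.items():
        share = sum(hits) / len(hits)
        advice = "acceptable" if share <= max_risk else "discard or add preventive compensation"
        report[option] = (share, advice)
    return report
```

The two recommendations mirror the alternatives in the example: drop the option, or keep it only with preventive compensation for the exposed regions.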

Step 5: The Human Authority Makes the Sovereign Final Decision:

The OAEC's Reports provide human decision-makers (Parliament, Ministry, etc.) with significantly more robust knowledge elements.

Custos AI does not replace but empowers human judgment for more informed, aware, and responsible decisions.

6. CUSTOS AI (MACRO) - PHASE 4: INSTITUTIONAL LEARNING AND ACTIVE PROMOTION OF ETHICS

Step 6: Capitalising on Knowledge:

Investigation results (OAEC reports, anonymised EDCRs) become collective knowledge, channelled to NATIONAL OBSERVATORIES.

National Observatories: Manage AERN - National Ethical Reference Archives, collect EDCRs and local Ethical Challenge reports, monitor compliance, and can request corrections or propose sanctions for non-compliant AI systems.
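Anonymising EDCRs before they are shared could be done by replacing identifying fields with keyed hashes. The sketch below uses HMAC-SHA256 from Python's standard library; the field names in `IDENTIFYING_FIELDS` are hypothetical. Strictly speaking this is pseudonymisation: anyone holding the key can hash candidate values and re-link records, so key custody matters.

```python
import hashlib
import hmac

# Hypothetical EDCR fields that could identify people or organisations.
IDENTIFYING_FIELDS = {"operator", "citizen_id", "organisation"}


def anonymise_edcr(edcr: dict, key: bytes) -> dict:
    """Replace identifying fields with keyed pseudonyms (HMAC-SHA256, truncated)."""
    out = {}
    for name, value in edcr.items():
        if name in IDENTIFYING_FIELDS:
            digest = hmac.new(key, str(value).encode(), hashlib.sha256).hexdigest()
            out[name] = digest[:16]
        else:
            out[name] = value  # ethically relevant content is kept as-is
    return out
```

Because the same key always yields the same pseudonym, an Observatory can still spot repeated incidents from one operator without seeing who it is.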

Step 7: Global Harmonisation and AEND Evolution (Role of SMO-AI and OAEC):

Aggregated data from AERNs flows into the CEA - Central Ethical Archive, managed by the SMO-AI - Supranational Mother Observatory.

SMO-AI: Global strategic analyses, standards harmonisation, ethical R&D directives, identifies AEND update needs.

OAEC: Conveys AEND update proposals to institutional channels.

Feedback loop (Shepherd -> AERN -> CEA -> SMO-AI -> OAEC -> AEND) for an adaptive system.
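The flow from national archives up to an AEND update proposal can be sketched as two steps: aggregation into the CEA, then an SMO-AI review that flags principles violated in several countries. The record format and the `min_countries` rule are assumptions for illustration; actual SMO-AI analyses would be far richer.

```python
from collections import defaultdict


def aggregate_to_cea(aerns: dict[str, list[dict]]) -> dict[str, set[str]]:
    """CEA view: for each AEND principle, the countries whose AERN reports a violation."""
    cea = defaultdict(set)
    for country, records in aerns.items():
        for rec in records:
            cea[rec["principle"]].add(country)
    return dict(cea)


def smo_ai_review(cea: dict[str, set[str]], min_countries: int = 2) -> list[str]:
    """Flag principles violated in several countries as candidates for an
    AEND update proposal, which the OAEC would then forward."""
    return sorted(p for p, countries in cea.items() if len(countries) >= min_countries)
```

Counting distinct countries rather than raw incidents is one way to separate systemic problems from a single national anomaly.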

Step 8: Actively Promoting Ethics (Beyond Control):

Incentives: "Voluntary AI Ethical Excellence Certifications" (rewarding Shepherd implementation, "ethics-by-design" processes, and a low number of EDCRs).

International Calls: To continuously improve Shepherd Algorithms.

"Algorithm Design for Good" Culture: Promote "ethics-by-design," supported by possible dedicated bodies for "Ethical Algorithm Design" working with SMO-AI/OAEC to translate AEND into practical guidelines.
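Eligibility for a voluntary certification could be checked mechanically once the criteria are fixed. The sketch below encodes the three incentives listed above, reading the EDCR criterion as an EDCR rate per decision below an assumed ceiling; the class, field names and the `max_edcr_rate` value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AISystemProfile:
    has_shepherd: bool
    ethics_by_design_documented: bool
    edcrs_last_year: int
    decisions_last_year: int


def eligible_for_certification(p: AISystemProfile, max_edcr_rate: float = 1e-4) -> bool:
    """Hypothetical criteria: Shepherd present, documented ethics-by-design,
    and EDCRs per decision at or below an assumed ceiling."""
    if p.decisions_last_year == 0:
        return False  # no operating history to assess
    rate = p.edcrs_last_year / p.decisions_last_year
    return p.has_shepherd and p.ethics_by_design_documented and rate <= max_edcr_rate
```

Normalising by the number of decisions avoids penalising heavily used systems simply for their volume.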

7. "CUSTOS AI MINI" (INSTITUTIONAL ETHICAL PILOT) - AI ETHICS INTEGRATED INTO DAILY INSTITUTIONAL LIFE

What it is: A specialised AI tool for officials, legislators, etc., for daily ethical support in their work and decision-making processes.

Main Purpose:

To draft documents/regulations/policies proactively considering AEND.

Preliminary ethical risk analysis of human projects/initiatives.

To assess the potential ethical impact of different human policy options.

How it Works (Custos AI Principles Applied "One-to-One"):

Shepherd Algorithms Integrated into "Custos AI Mini": Verify in real-time the output generated by the "Custos AI Mini" assistant (or user drafts) against AEND, flagging potential issues.

"Local" Preventive Chaotic Intelligence in "Custos AI Mini": Performs mini-chaotic analyses on the user's specific request/draft to identify ethically problematic "butterfly effects" of human proposals or decisions being developed.
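The in-editor check performed by the integrated Shepherd layer can be approximated, very roughly, by pattern rules mapped to AEND principles. The text describes a trained assistant, so the regular-expression matching below is only a transparent stand-in; the `CHECKS` table, principles and advice strings are hypothetical.

```python
import re

# Hypothetical AEND-derived checks: pattern -> (principle, advice).
CHECKS = {
    r"\b(nationality|ethnicity|religion)\b":
        ("non-discrimination", "justify or remove use of a protected attribute"),
    r"\bautomatic(ally)?\b.*\bwithout (human )?review\b":
        ("human oversight", "add a human review step"),
    r"\bretain(ed)? indefinitely\b":
        ("privacy", "set a data retention limit"),
}


def review_draft(text: str) -> list[tuple[str, str, str]]:
    """Return (matched text, principle, advice) for each check that fires on the draft."""
    findings = []
    for pattern, (principle, advice) in CHECKS.items():
        for m in re.finditer(pattern, text, flags=re.IGNORECASE):
            findings.append((m.group(0), principle, advice))
    return findings
```

Returning the matched text with each finding lets the official see exactly which wording triggered a flag, in keeping with empowering rather than replacing human judgment.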

Not Replacement, but Empowerment of Human Judgment:

"Custos AI Mini" is not "ethically sentient." It is trained to adhere to AEND and identify problematic patterns. It helps humans overcome limitations (consistency with rules, consequence prediction, bias).

Expected Benefits:

Active, widespread, "by design" ethical prevention in human decision-making processes.

Empowerment and continuous ethical training for public operators.

Resource optimisation (less burden on Custos AI Macro).

Feeding the Macro System with high-quality operational data (issues, solutions, risk patterns identified in daily use by decision-makers).

8. CUSTOS AI & "CUSTOS AI MINI" - TOGETHER FOR A RESPONSIBLE FUTURE (Ethically Founded Human Decisions)

Integrated Vision:

CUSTOS AI (MACRO): Ethical support of last resort and strategic oversight for high-impact public human decisions; AEND evolution engine.

"CUSTOS AI MINI" (Ethical Pilot): Proactive and proximate ethical support tool for those making decisions within institutions, facilitating "ethics by design" in daily work.

Fundamental Synergy: Holistic approach to promote a widespread culture of ethical responsibility in human decision-making processes.

Primacy of Informed and Responsible Human Judgment: Both systems are designed to empower, inform, and support the critical judgment and final responsibility of human beings, never to replace or usurp them.

Shared Ultimate Goal: A future where technologies (including AI) are used to make human decisions that are safe, fair, and effective, fully serving individual and collective well-being, and firmly anchored to the fundamental values of justice, freedom, and dignity that characterise our democratic and open societies.

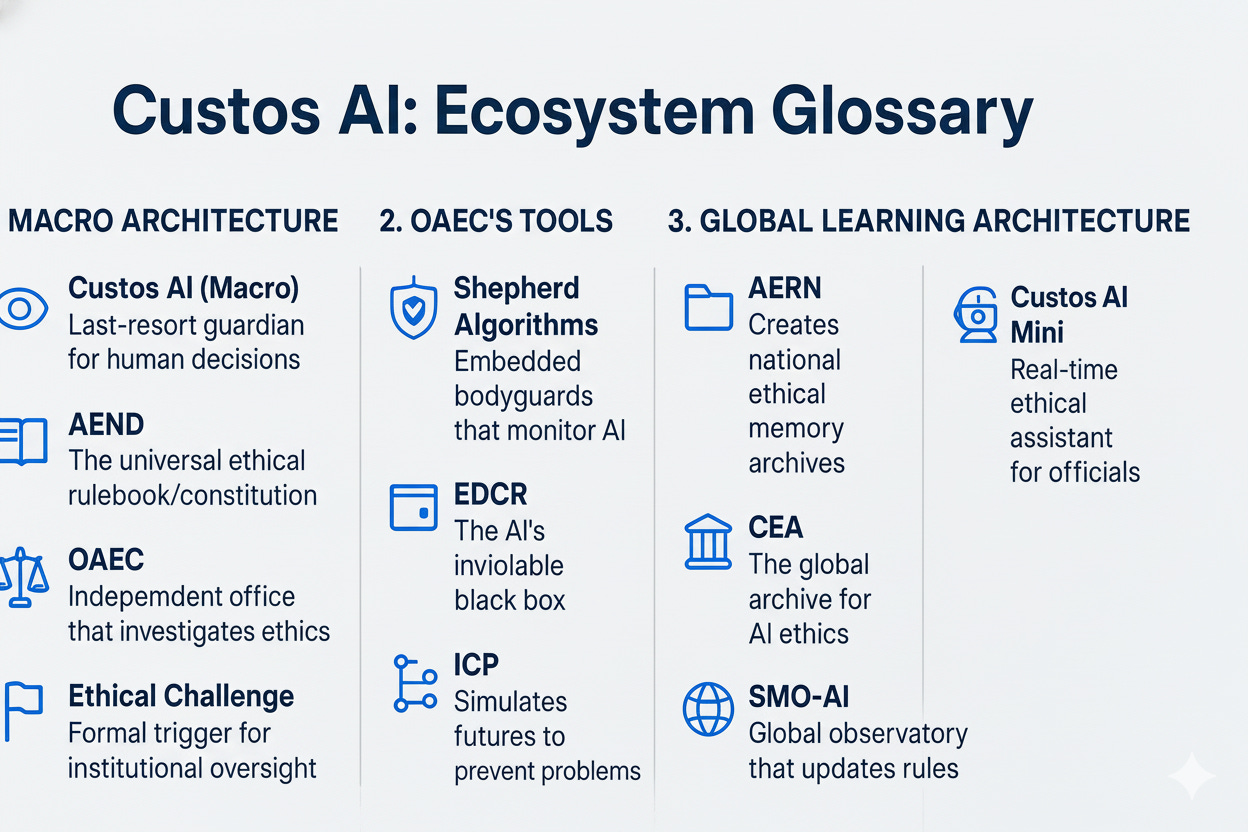

The Complete Custos AI Glossary: Who is Who and What They Do

PART 1: THE “MACRO” ARCHITECTURE (The Central System)

Custos AI (Macro): The System’s Guardian

What is it? It is the entire “last-resort” control system. Think of it as a sort of Constitutional Court or a Guarantor Authority for the most important ethical decisions of a country or institution.

What does it do? It activates only for high-impact issues and only when called upon. Its purpose is to watch over the ethics of the most critical human decisions.

AEND (Directive Normative Ethical Architrave): The Ethical Constitution

What is it? The “Sacred Book” of rules. It is a document that combines existing laws (e.g., European AI Act), technical standards (e.g., ISO), and fundamental philosophical principles (justice, fairness, privacy).

What does it do? It is the universal yardstick for the entire ecosystem. Everything, from a minister’s decision to an AI’s output, is measured against the AEND to see if it is ethically “compliant.”

OAEC (Office for Algorithmic Ethical Compliance): The Investigating Office

What is it? The “Arbiter’s” office. It is a team of independent super-experts (technicians, lawyers, philosophers) who answer to no one but the law and ethics.

What does it do? It is the operational arm of Custos AI. When it receives a request for a "VAR" (an "Ethical Challenge," named after football's Video Assistant Referee), the OAEC conducts the investigation to uncover the truth. It is the detective.

Ethical Challenge: The Ethical “VAR”

What is it? The formal request that a qualified public body (e.g., a Parliament, a Ministry, a Guarantor) can make to the OAEC when it has a well-founded doubt about the ethics of a decision or an AI.

What does it do? It triggers the entire control process. Without an “Ethical Challenge,” the OAEC does not act.

PART 2: THE OAEC’s TOOLS (The Detectives’ Superpowers)

Shepherd Algorithms: The “Bodyguards” in the Code

What are they? Mandatory software components installed inside “critical” AIs. They are not the AI, but they monitor it from within.

What do they do? They check that the “host” AI respects the AEND’s rules. If the AI is about to commit a serious violation, the “bodyguard” intervenes, blocks it, and records everything.

EDCR (Ethical Decision Crash Recording): The AI’s “Black Box”

What is it? It is the secret and inviolable log file created by a Shepherd Algorithm when it detects a serious violation.

What does it do? It provides the OAEC with objective proof of “what the AI thought” and “what it was about to do.” It is the key evidence for the investigation.
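An "inviolable" log is usually approximated with a hash chain, in which each entry stores the hash of the previous one so that any later edit becomes detectable. The sketch below uses SHA-256 from Python's standard library; the record fields are hypothetical, and a hash chain is tamper-evident rather than tamper-proof unless its latest hash is also anchored somewhere outside the operator's control.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(event: dict, prev_hash: str) -> str:
    body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


def append_record(log: list[dict], event: dict) -> None:
    """Append an event; each entry stores the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)})


def verify(log: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True
```

The OAEC would run `verify` before treating an EDCR as evidence; a `False` result means the recording can no longer be trusted as an objective record.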

Preventive Chaotic Intelligence (ICP): The Future Simulator

What is it? A highly advanced “what-if” engine, a simulator that uses Chaos Theory.

What does it do? It analyzes a decision and explores thousands of possible consequences, even the most unpredictable ones, to find the “downhill paths” to disaster (the “Negative Attractors”). It is used for predictive defense: preventing problems, not just fixing them.

PART 3: THE GLOBAL LEARNING ARCHITECTURE (The “Collective Brain”)

AERN (National Ethical Reference Archives): The National Archives

What are they? They are the national databases where all the data from a country’s OAEC investigations (reports, anonymized EDCRs, etc.) are collected.

What do they do? They create a national historical memory of the ethical problems encountered, allowing a country’s system to learn from its mistakes.

CEA (Central Ethical Archive): The “Library of Alexandria” of AI Ethics

What is it? The central and global archive where data from all national AERNs converge.

What does it do? It is the world’s largest database on the ethical failures and successes of AI. It allows for the identification of patterns and risks on a planetary level.

SMO-AI (Supranational Mother Observatory): The Global Observatory

What is it? A supranational organization (think of a kind of “CERN” for AI ethics) that manages the Central Archive (CEA).

What does it do? It analyzes global data to identify worldwide risk trends, harmonize standards, and, most importantly, propose updates to the “Ethical Constitution” (AEND) to keep it always up to date with new threats.

PART 4: DAILY PREVENTION (Ethics “at Hand”)

Custos AI Mini: The “Guardian Angel” on the Desk

What is it? An application, a specialized AI assistant for every official, legislator, or public decision-maker.

What does it do? While a person is working (writing a law, preparing a budget), “Custos AI Mini” assists them in real-time by suggesting more ethical formulations, flagging potential violations of the AEND, and running mini-simulations (using a “local” ICP) to show the possible ripple effects of a decision. It is ethics made practical and daily.

Before concluding, I wish to share something I have lived through over the past ten days, a deeply personal experience that has unexpectedly resonated with my work on Custos AI.

This period has been one of extraordinary intensity for my city. After days electric with anticipation, on May 23, 2025, Napoli, my city's team, won the fourth Scudetto in its history (the Italian football championship). The explosion of joy that followed was immense.

The palpable love and beauty enveloping Napoli culminated in the grand official celebrations on the seafront on May 26, 2025, where hundreds of thousands of people gathered to cheer the team as it paraded on an open-top bus (as you will see in the video that follows). That atmosphere stirred a profound creative energy within me, which fed new ideas and further refinements of the Custos AI framework. Watched in over 170 countries and attended by a huge crowd, these vibrant celebrations were truly a fantastic global showcase for Italy.

Instagram Video by @robertosalomonephotojournalist

Beyond the sheer sporting triumph, what you've witnessed is a potent identity-affirming event. Renowned journalist and writer Roberto Saviano has described Napoli as 'the last of the ancient cities.' This underscores how its unique soul and historico-cultural fabric erupt with such force at moments like these, becoming a collective rite of belonging and pride.

I wanted to share this because it helps to illuminate the cultural and emotional wellspring from which my reflections, including those on Custos AI, draw their vitality.

Thank you immensely for being here, for your curiosity, your time, and your invaluable support. Your engagement is truly vital to this ongoing exploration.

Let's continue this fascinating journey together.

Ad maiora!

With gratitude,

Cristiano


Let’s Build a Bridge.

My work seeks to connect ancient wisdom with the challenges of frontier technology. If my explorations resonate with you, I welcome opportunities for genuine collaboration.

I am available for AI Safety Research, Advisory Roles, Speaking Engagements, Adversarial Red Teaming roles, and Creative Writing commissions for narrative and strategic storytelling projects.

You can reach me at cosmicdancerpodcast@gmail.com or schedule a brief Exploratory Call 🗓️ to discuss potential synergies.