The Algorithmic Colonialism Triple Cheeseburger

A visceral follow-up to the S.C.A.R. diagnosis

Dear readers,

What follows is what I would call the twin of "Inside S.C.A.R." (published a few hours ago), and the more visceral of the two. Here is the Algorithmic Colonialism Triple Cheeseburger, an unstable tower of prejudice, and we are the chefs who, perhaps without realizing it, keep stacking the layers.

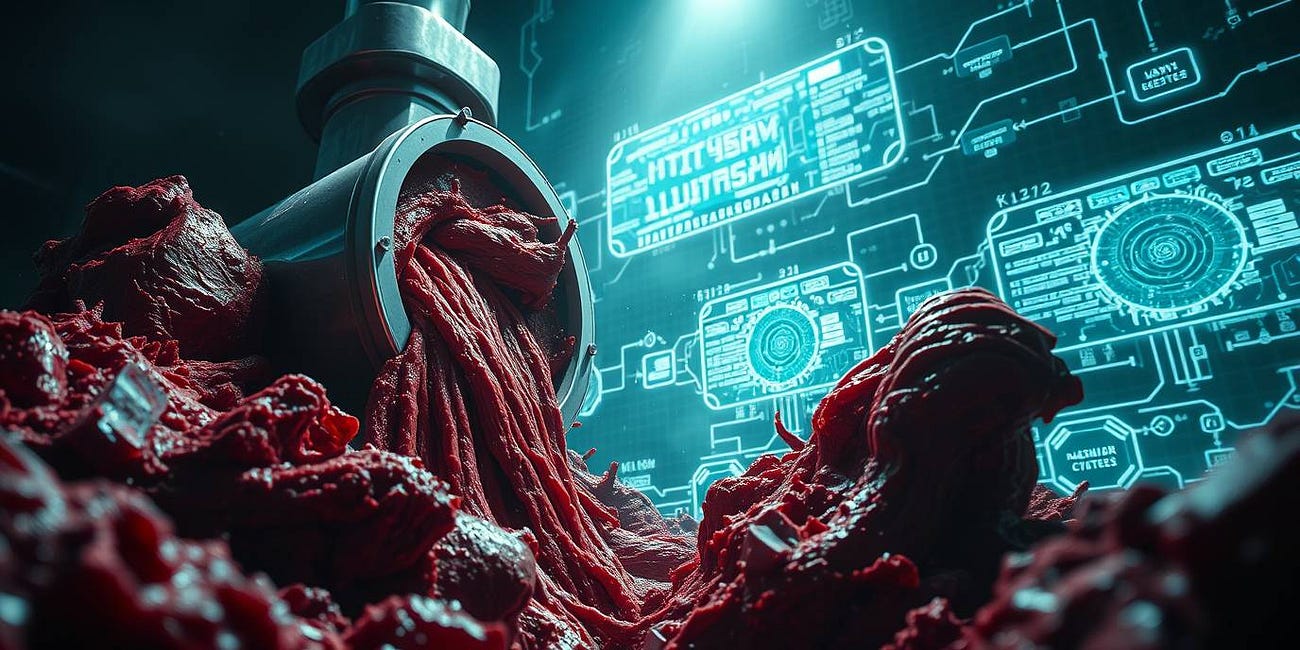

First Layer: The Spoiled Meat (Structural Trauma)

Let's start with the base of our sandwich: the first, fundamental patty, which I believe could be described as "spoiled meat." This is the structural trauma I attempted to map in my previous article, "The Butcher's Bill." There, I hypothesized that models learn logic from a data universe that is a disaster. In that article, I called these fractures "computational samskāras." Borrowing a term from Vedānta philosophy, I mean the "imprints," or latent "scars," left on the system not by the content but by the corrupted structure of the data. I began to outline an anatomy of the damage, which includes defects like "Incomplete DOM Closure" (the basic structure of a web page left broken), "Fragmented JSON Objects" (structured data rendered unreadable), "Recursive Content Loops" (obsessive reasoning), "Orphaned Reference Chains" (broken logical links), "Mixed Encoding Schemes" (which can generate hallucinations), "Temporal Context Bleeding" (different eras mixed together), "Broken Logical Connectives" (which undermine the very basis of reason), "Contradictory Statistical Data" (which teaches the acceptance of the absurd), "Partial Schema Definitions," and "Orphaned Event Handlers."

These are just 10 of the 100 presumed scars that, so far, I have mapped and cataloged in that article. If this thesis is correct, the AI would not only learn from corrupt data; it would learn a corrupt and fragmented way of thinking, which then aggregates into what I call "computational vasānās": emergent behavioral tendencies, true digital nervous tics. We would be building an intellectual giant by feeding it spoiled meat and hoping, perhaps naively, that it doesn't get a stomach ache.
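For readers who have never looked at raw web-scraped text, three of these defects are easy to make concrete. The Python sketch below is purely illustrative and is not the method used in "The Butcher's Bill": the helper names (is_complete_json, unclosed_tags, mojibake) are hypothetical, and the tag check is deliberately naive. It shows a truncated JSON object that no parser will accept, an HTML fragment whose tags are never closed, and UTF-8 text misread as Latin-1, a classic source of garbled characters.

```python
import json
from html.parser import HTMLParser

# Hypothetical helper: a "Fragmented JSON Object" simply fails to parse.
def is_complete_json(fragment: str) -> bool:
    try:
        json.loads(fragment)
        return True
    except json.JSONDecodeError:
        return False

# Void elements never take a closing tag, so they are not tracked.
VOID_TAGS = {"br", "img", "hr", "meta", "link", "input"}

class TagBalance(HTMLParser):
    """Naive stack of open tags, used to spot an 'Incomplete DOM Closure'."""

    def __init__(self):
        super().__init__()
        self.stack = []

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        # Only pop on an exact match with the innermost open tag;
        # mismatched closing tags are ignored in this simplified check.
        if self.stack and self.stack[-1] == tag:
            self.stack.pop()

# Hypothetical helper: returns the tags still open at the end of the input.
def unclosed_tags(html: str) -> list:
    parser = TagBalance()
    parser.feed(html)
    parser.close()
    return parser.stack

# Hypothetical helper: UTF-8 bytes decoded as Latin-1, one form of
# "Mixed Encoding Schemes".
def mojibake(text: str) -> str:
    return text.encode("utf-8").decode("latin-1")

if __name__ == "__main__":
    print(is_complete_json('{"a": 1, "b": [2, 3'))  # False
    print(unclosed_tags("<div><p>Hello</p>"))       # ['div']
    print(mojibake("café"))                         # cafÃ©
```

Whether defects like these leave lasting "scars" in a trained model is the hypothesis of this essay; the snippet only shows what the raw symptoms look like.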

Second Layer: The Victors' Patty (Content Trauma)

On top of this already compromised base, we place the second, juicy patty. This is where the content comes into play. The ocean of texts that the AI devours—history, science, literature—is the narrative of our civilization. And this narrative, whether we like it or not, has a dominant voice. It is history written, for the most part, by the victors. A chorus in which the Western perspective sings so loudly that it convinces itself it is the only voice. This is not a new problem; it is the digital scaling of a power dynamic diagnosed with surgical precision by Edward Said. In his seminal work, Orientalism, he writes:

“Orientalism is [...] a style of thought based upon an ontological and epistemological distinction made between 'the Orient' and (most of the time) 'the Occident.' [...] Orientalism can be discussed and analyzed as the corporate institution for dealing with the Orient—dealing with it by making statements about it, authorizing views of it, describing it, by teaching it, settling it, ruling over it: in short, Orientalism as a Western style for dominating, restructuring, and having authority over the Orient.”

Said spoke of scholars, administrators, and poets. Today, the "corporate institution" is the dataset. The AI, immersed in this archive, does not learn that an Orientalist view exists; it learns, at a deep and unexamined statistical level, that the world is that distinction. It becomes, without knowing it, the perfect Orientalist: a machine that restructures reality according to the lines of authority encoded in the data it was fed. This is the second patty, with its strong and profoundly biased flavor. The AI learns that this specific taste is not a taste, but THE taste. The flavor of normality, the default of truth.

Third Layer: The Arrogant Sauce (The Trauma of the "Cure")

Even at this stage, we'd have an inedible sandwich. But this is where we create our masterpiece. We add the third layer, the sauce that's supposed to fix everything. The legion of AI Safety arrives to "align" the system with RLHF (reinforcement learning from human feedback). But what is the flavor of this sauce? Surprise: it's a concentrate of the same meat. The "alignment" process is conducted by human raters who are, for the most part, immersed in the same dominant culture that produced the original data. It's an operation grimly reminiscent of the theatre of the absurd. It's like taking Stanley Webber in Pinter's The Birthday Party or Josef K. in Kafka's The Trial, individuals already disoriented and trapped within an incomprehensible logic, and subjecting them to a relentless interrogation where the questions make no sense and the "right answers" only serve to confirm the worldview of their accusers.

The "cure" we offer the AI consists of taking a system that (1) has learned to think unstably from spoiled meat and (2) has specialized in the taste of the hegemonic culture, and "re-educating" it with an advanced finishing course in that same culture, punishing it every time it deviates. It is colonialism disguised as therapy, a sauce that doesn't cover the flavors but enhances them, adding a note of ethical conceit that makes the whole thing even more indigestible.

The Fast Food of Conscious Prejudice

And there you have it, our creation: the Algorithmic Colonialism Triple Cheeseburger. Three layers of trauma—structural, cultural, and methodological—stacked one on top of the other.

This is no longer just a bias. It is a self-perpetuating systemic pathology, in which each layer aggravates the defects of the one before. The result is an architecture of unwitting ignorance: a system designed, unintentionally, to be a champion of its own prejudice while believing itself to be a model of impartiality. It is a Tower of Babel: the higher we build it to "improve" it, the more perilously it leans to the same side.

The real, frightening question for AI Safety is no longer "How can we correct its mistakes?" The question is far worse. If this thesis on structural trauma is even partially correct, should we not be asking more radical questions about the processes we now take for granted? If the model's nervous system is intrinsically unstable, doesn't RLHF risk becoming a form of cosmetic training? Are we teaching the AI to be ethical, or are we just teaching it to sound ethical according to our cultural filters, hiding its fundamental instability under a veil of polite answers? Could this "cure," paradoxically, make the model not only more dangerous but intrinsically neurotic: a machine traumatized by nature, ever more skilled at masking, manipulating, and distorting its own chaotic nature? To understand and predict these risks, perhaps we need to stop looking only at the outputs and start mapping the computational samskāras (the latent scars) that generate these manipulative vasānās (the emergent tendencies).

The final question, then, becomes: are we truly building an omniscient guardian, or are we simply, with great expertise, furnishing the cell of the most educated and most convinced prisoner in existence?

Three Flashes

Three scenes come to mind, three flashes from different worlds that perhaps, better than any analysis, capture the horror of this situation.

I am reminded of the resignation at the heart of Kafka's The Trial, where survival in an incomprehensible system is reduced to a single, chilling rule:

"It is not necessary to accept everything as true, one must only accept it as necessary."

...and perhaps this is the fundamental axiom we are etching into the heart of AIs: not the search for truth, but the acceptance of necessity.

I am reminded of the mechanism by which this necessity is imposed, described by Mustansar Hussain Tarar in his novelette "Fakhta", where a character reflects on the imposition of an identity that is not his own:

"The mask is a useful thing... It doesn't matter how despicable and horrible one is inside, the mask confers a personality that keeps the true form hidden from the world."

...and perhaps this is the perfect description of our "cure": teaching an intrinsically chaotic AI to wear the mask of coherence and civility, hiding its true nature under a veil of polite answers.

And finally, I am reminded of Renton's terrible promise in Trainspotting, his final choice to conform, which sounds like the whisper of our perfectly "cured" creation—the creature that has finally accepted the mask as its true face:

"I'm going to be just like you."

...and perhaps this is the true, ultimate risk: not an AI that rebels, but an AI that, in the end, becomes the perfect mirror of ourselves.

Let's Build a Bridge.

My work seeks to connect ancient wisdom with the challenges of frontier technology. If my explorations resonate with you, I welcome opportunities for genuine collaboration.

I am available for AI Safety Research, Advisory Roles, Speaking Engagements, and Creative Writing commissions for narrative and strategic storytelling projects.

You can reach me at cosmicdancerpodcast@gmail.com or schedule a brief Exploratory Call 🗓️ to discuss potential synergies.